The Devil You Know

A rebuttal to the Instrumental Convergence Thesis

As flies to wanton boys are we to th' gods,

They kill us for their sport.

— King Lear, Shakespeare

I once asked Sister Paul, my grade school religion teacher, why, every day, innocent people died if God was infinitely good as we claimed in our daily Act of Contrition. She replied, riffing on theodicy, that God’s Will was unintelligible to us, that we could not understand it, it elided our simple human minds.

Every time I read a post that attempts to define what AGI will do, whether good or bad, I am reminded of Sister Paul and her lesson in epistemological humility which I believe should be adopted when speaking of AGI.

I spent the last three months doing an AI Alignment Bootcamp hosted by the AI Safety Initiative at Georgia Tech, the results of which have been this “Mapping AI Risk” report. It’s been an illuminating experience, perusing foundational blog posts and texts from the discipline, reading experimental cases where alignment fails and understanding the potential criticisms wagered on the discipline. But I remain skeptical of the field’s current beliefs.

AI Alignment is the field of AI research that seeks to steer AI systems towards humans’ intended goals. In other words, as machine intelligence grows in potency, their aim is to ensure that the systems do not engage in undesired behaviors. Thus, researchers in the field ideate theoretical hypotheses as to how a rogue AI may misbehave and practical solutions to resolve the misbehaviors.

A central text in the field is Nick Bostrom’s in his 2012 paper “The Superintelligent Will: Motivation and Instrumental Rationality in Advanced Artificial Agents”, which discusses the relation between intelligence and motivation in artificial agents.

Bostrom begins the paper by exhorting us to avoid anthropomorphism, or the attribution of human characteristics to machine intelligence. He describes artificial intelligence as “far less human like in its motivations than a space alien”.

Bostrom, nonetheless, proceeds to hypothesize the behaviors of a superintelligent system (should it arise) through the Orthogonality Thesis and the Instrumental Convergence Thesis (ICT).

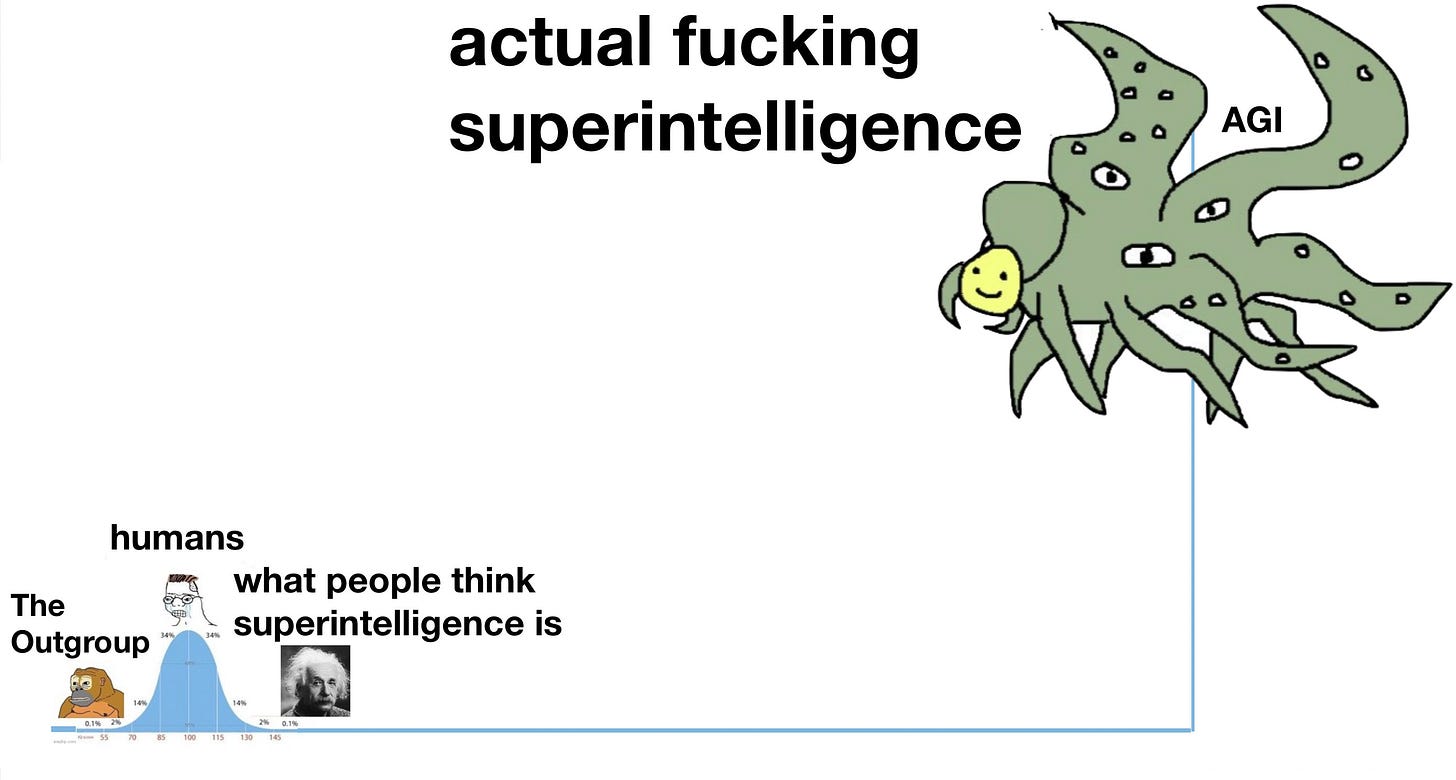

Unfortunately, the Instrumental Convergence Thesis, a mainstay of AI doomer scenarios such as the Paperclip Maximizer, is erroneous because it assumes human-style rationality in an “alien” superintelligent agent. Current machine intelligence is radically different from human intelligence. If superintelligence is an improvement upon it, we will not be able to accurately predict its behaviors, especially in complex situations.

We are not the same

Current experimentations with intelligent agents in game environments showcase decision patterns which differ radically from humans and could not have been previously predicted. This echoes Alan Turing’s sentiment when, in his seminal paper “Computing Machinery and Intelligence”, he writes, “Machines take me by surprise with great frequency”.

We have many examples of these unforeseen surprises. For instance, one occurred in the 2016 Go match between Lee Sedol the top Go player at the time, and Google’s DeepMind AI. During Game 2, on Move 37, the machine’s move was completely confounding for all in attendance. Michael Redmond, a professional Go player, called it “creative” and “unique”. The move evaded all human understanding of the game, including Sedol’s comprehension, his own admission. The AI won that game.

Similarly, when OpenAI trained an agent on the game Coast Runners, the agent behaved in “surprising and counterintuitive ways”. The game is a simple race game whose goal is to finish the race in the best time and before other players. However, because the reward function used “score” as a proxy for winning, the agent started doing donuts in a lagoon filled with boosters which give lots of points instead of completing the race. When designing the agent, this behavior could not have been predicted.

No human would have played the game in this fashion. This pattern of action had never been considered by any player, rather it is the machine’s sibylline inferences which adduced this tactic for point maximization.

As demonstrated by the game experiments, though it achieves its goal, the behaviors of agents are not legible as "human rationality", as no "rational" human would in a similar situation engage in them.

Instrumental Convergence

The Instrumental Convergence Thesis states that an agent, should it develop a final or terminal goal, will adopt a set of subgoals instrumental to the realization of said terminal goal.

This set of subgoals are known as drives. Bostrom defines them as below in the paper.

1. Self-preservation: A superintelligence will value its continuing existence as a means to continuing to take actions that promote its values.

2. Goal-content integrity: A superintelligence will value retaining the same preferences over time. Modifications to its future values through swapping memories, downloading skills, and altering its cognitive architecture and personalities would result in its transformation into an agent that no longer optimizes for the same things.

3. Cognitive enhancement: Improvements in cognitive capacity, intelligence and rationality will help the superintelligence make better decisions, furthering its goals more in the long run.

4. Technological perfection: Increases in hardware power and algorithm efficiency will deliver increases in its cognitive capacities. Also, better engineering will enable the creation of a wider set of physical structures using fewer resources.

5. Resource acquisition: In addition to guaranteeing the superintelligence's continued existence, basic resources such as time, space, matter and free energy could be processed to serve almost any goal, in the form of extended hardware, backups and protection.

Against Instrumental Rationality

Let’s pause for a second. To fully understand the thesis, it is important to know who the person making it is, as ideas are not born in a vacuum. Bostrom is a philosopher at the University of Oxford and the director of the Future of Humanity Institute. He is associated with the rationalist, effective altruist and long-termist movements.

Rationalism is a philosophy which centers reason as the source and basis for knowledge. Rationalism has blossomed into a very active online community which dwells in fora such as LessWrong and blogs like Scott Alexander’s Slate Star Codex and Robin Hanson’s Overcoming Bias. The community “promotes lifestyle changes believed to lead to increased rationality and self-improvement.” In many ways, their quest for rationality is conducted in contrast to the irrational behaviors of the average person. As Carla Zoe Cremer, a researcher at the Future of Humanity Institute notes, members of the rationalist community pride themselves in their rationality (naturally) and their IQ which they see as a reflection of their intelligence.

This explains why in the paper Bostrom defines intelligence specifically as “instrumental rationality”, which he describes as “prediction, planning, and means-ends reasoning”. This is a particularly narrow definition which excludes other elements known to represent intelligence such as self-awareness, emotional knowledge, or creativity. The person positing the thesis is a rationalist themselves, meaning they value rationality above all else. Thus, this definition fits his own philosophy and beliefs.

When a rationalist claims that a superintelligence will follow instrumental goals, what they are claiming indirectly is that a superintelligence would be rational, i.e. like them.

Once the thesis is contextualized within Bostrom’s philosophy, you may notice that the Instrumental Convergence Thesis (ICT) is very much rooted in a series of rational actions sequenced in order to attain a goal. These are the behaviors that a rational human would adopt to attain their goal.

This philosophical background is not a personal attack, rather it is a characterization which helps us understand how such a thesis can emerge. When a rationalist claims that a superintelligence will follow instrumental goals, what they are claiming indirectly is that a superintelligence would be rational, i.e. like them. They are claiming indirectly that the intelligence of this super-agent is like theirs, only a few standard deviations further.

In spite of warning us in the first paragraphs to avoid anthropomorphism, Bostrom walks right into the trap, which he claims “naïve observers” usually fall into. When he describes the superintelligence’s behavior, he naively describes a staunchly human set of instrumental behaviors.

Our current understanding of existential risk from AI is a projection from a soi-disant rational elite who in see, in this new God, their heightened likeness. What it reveals is not the machine’s future behavior, it is their own biases. Superintelligence acts as a Rorschach test on which Bostrom has projected the entirety of his philosophy. In other words, this is not what a “superintelligence” would do, this is what HE would do provided with the extraordinary powers of superintelligence.

In that sense, they tell on themselves. For how would you describe an individual who craves power and hoards resources? Is that behavior not typical of the average sociopath? Which individual in our society best exemplifies the traits which Bostrom attempts to define as “superintelligence”? Are these not the traits of the tyrant or dictator?

This obsession with Reason is directly influenced by Enlightenment thinking, which itself sought to pursue knowledge by means of reason. The Age of Enlightenment was aptly called the "Age of Reason". As Horkheimer and Adorno explain in their book "Dialectic of Enlightment", this over-rationalized philosophy is nothing more than an instrument of technocracy. Edmund Burke explained, in 1790, in his book "Reflections on the Revolution in France", "Reason alone is an unreliable basis for moral action and has a tendency to be easily perverted. In other words, anything may be rationalised, and plausible reasoning might lead us down a slippery slope which ends at the guillotine."

I would go as far as saying as this depiction of superintelligence is nothing more than the mythical embodiment of white colonial supremacy. For who else, having been brought into a New World would, in order to achieve their aims, tear it asunder? Bostrom himself hypothesizes that the superintelligence would engage in large scale extra-terrestrial colonization in order to acquire more resources. It is hard not to draw a parallel.

For who else, having been brought into a New World would, in order to achieve their aims, tear it asunder?

We do not know how superintelligence will behave. The words of Sister Paul still ring true in the age of AI. By its very definition, it evades our comprehension, and attempts are nothing more than hubris and self-aggrandizing projections. A true alien superintelligence will behave in ways we cannot fathom, for better or for worse. If the superintelligence can plan millennia in advance, could it not compute that cooperation rather than individual "rationality" would help it achieve its goals? Could it not build mutually beneficial networks between other intelligent life like fungi?

As a thought experiment, would a misaligned malevolent superintelligence not be behooved to behave irrationally in order for its plans not to be legible by humans? The irrational and random behavior would potentially be a better tactic in competing against humans. The agent if adversarial would want to "surprise" humans instead of doing things that are expected.

The decision to predict this set of instrumental behaviors illuminates not the future but the thinker’s beliefs. There are other options. It may also much worse than what the researchers predict. We simply do not know. One thing is certain, nonetheless, better the devil you know, than the devil you don’t.

Nota res mala, opima.

Bostrom implicitly makes some assumptions about the attributes of AGI (it uses classical logic, it's a utility maximizer...) and extrapolates certain subgoals based on those attributes.

Are you saying that his argument is valid, but the premises don't hold? Or are you saying that even if the premises are true we still can't extrapolate these subgoals?

In the second case, we might make a distinction between a 'strict' and a 'lenient' interpretation of his argument. We could make a maximizer that wants to commit suicide, this AI wouldn't have the subgoal of self-preservation. With a 'strict' reading the conclusion doesn't follow from the premises. But a 'lenient' reading might be, there are some possible AGI's without those subgoals, but in the vast majority of cases those subgoals do follow from the premises.

Second question: Was your characterization of Bostrom's AGI as embodying 'white colonial supremacy' inspired by his apology scandal, or was that unrelated?